I’m confused about the tool called Chat GPT Zero and how it detects AI-generated content. I recently had a document flagged by Chat GPT Zero and it caused some issues for my project. I need to understand its accuracy and how to avoid false positives in the future.

Chat GPT Zero (I think you mean GPTZero) is supposed to detect whether a chunk of text was likely written by an AI like ChatGPT or a human. The way it works is kind of guessy: it looks at stuff like sentence length, how predictable the words are, repetition, and overall randomness in the text. Supposedly, AI-generated content is “too perfect,” uses less variation, and sticks to certain vocab/phrasing. In reality, though, these tools are not magic and make a lot of mistakes. If your document got flagged, it could mean you write sort of formally, or just happened to hit some of the patterns the tool looks for. Also, it can get tripped up by non-native English, translations, and even scientific/technical writing.
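To make the "sentence length, repetition, randomness" idea a bit more concrete, here's a rough Python sketch of the kind of surface statistics a detector could compute. To be clear, this is not GPTZero's actual code or scoring. The function names (`sentence_lengths`, `length_variation`, `type_token_ratio`) and the two sample strings are made up for illustration, and real detectors presumably rely on a language model rather than simple counts like these.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., !, ? and return the word count of each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def length_variation(text: str) -> float:
    """Coefficient of variation of sentence length (std dev divided by mean)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


def type_token_ratio(text: str) -> float:
    """Distinct words divided by total words: a crude vocabulary-variety measure."""
    words = re.findall(r"[a-z']+", text.lower())
    return len(set(words)) / len(words) if words else 0.0


# Hypothetical sample texts, both written by a human for this example.
uniform = "The cat sat here. The dog sat there. The bird sat still."
varied = "Stop. The cat, ignoring everyone in the room, sat down on the warm laptop again. Why?"

print(length_variation(uniform), type_token_ratio(uniform))
print(length_variation(varied), type_token_ratio(varied))
```

The first sample has three sentences of identical length, so its variation score is zero, while the second swings between one-word and fourteen-word sentences. Both were typed by a person, which is pretty much the whole problem with reading these numbers as evidence of AI use.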

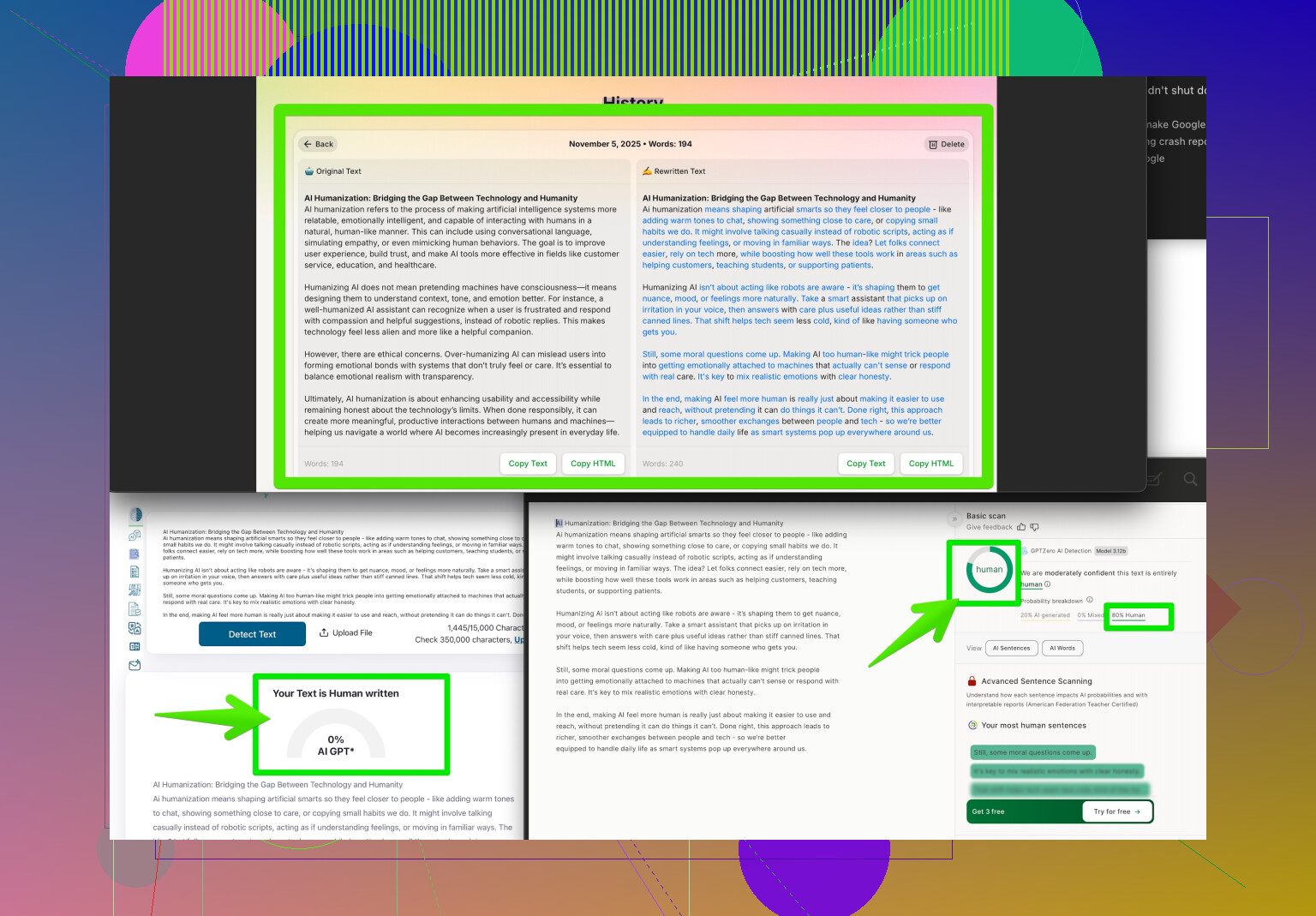

Honestly, their so-called “accuracy rates” are either unverified or just marketing hype. There’s no public dataset showing crazy high accuracy. Loads of folks on Reddit and Twitter have gotten false positives, including professors and pro writers. You really can’t take these flags as proof of anything. If you need to make your writing look more “human” (so it flies under these sketchy detectors), check out tools for making your writing sound naturally human, which do a much better job of blending things to avoid the false positives most detectors spit out.

TL;DR: GPTZero guesses based on patterns, is easily fooled, and can ding real human writing all the time. It shouldn’t be treated as a judge, jury, or executioner for AI use.

Honestly, @boswandelaar nailed some good points about GPTZero (and yeah, it’s GPTZero, not “Chat GPT Zero”; they just borrowed some hype from the name). The tool is all about sniffing out those statistical “tells” that AI like ChatGPT supposedly leaves behind, like uniform sentence structure, predictability, and shiny, neat vocab. But, IMO, that’s not foolproof at all (like, has anyone at GPTZero ever graded a grad student’s essay? Same level of dryness sometimes).

Thing is, GPTZero isn’t reading your mind. It’s looking for numbers, word patterns, and the kind of repetitive structure LLMs spit out. But if you write in a formal or dense style (think research paper, business pitch, or even stuff from non-native English speakers), it might trip up and toss a big ol’ “AI Detected!” flag for no real reason. Also, let’s be real: “accuracy” gets thrown around a lot, but there’s basically no open, peer-reviewed evidence for wild success rates. Way too many stories floating around Reddit and Twitter about totally human writers getting dinged.

In your shoes? I’m a fan of taking a step back from the panic. If you keep running into issues and have to dodge these questionable AI detectors for school/job reasons, you might check out tools that humanize your writing a bit better. Heard positive buzz about Clever AI Humanizer—rumor is, it actually smooths out those “AI-ness” quirks without making your piece sound like it was run through a blender.

For some up-to-date tactics on how real people make their text sound more genuine so these AI sniffers chill out, here’s a solid, digestible guide I found: Humanizing Your Writing to Bypass AI Detectors

Short version: Don’t trust the flag as gospel. Detectors like GPTZero will keep making mistakes until language itself becomes less predictable… Which isn’t happening, lol.

Here’s the reality check: GPTZero (and tools like it) operate mainly on pattern-matching algorithms looking for “AI-ness” in your writing—think monotony, constant sentence lengths, and predictable diction. Does it actually “know” you used an AI? Not at all. If you write news articles, research reports, or even just carefully edit your own prose, you’re fair game for a false positive. Some claims about reliability and detection rates get thrown around by their PR people, but they’re rarely validated outside their own demos.
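Here's a toy Python example of why a pattern-based rule can flag careful human writing. Everything in it is an assumption for illustration: the `looks_uniform` function, the `UNIFORMITY_THRESHOLD` cutoff of 0.25, and both sample texts are invented, and none of it reflects how GPTZero actually scores anything.

```python
import re
import statistics

# Hypothetical cutoff, chosen only for this illustration.
UNIFORMITY_THRESHOLD = 0.25


def looks_uniform(text: str, threshold: float = UNIFORMITY_THRESHOLD) -> bool:
    """Return True when sentence lengths vary less than the cutoff."""
    lengths = [len(s.split()) for s in re.split(r"[.!?]+", text) if s.strip()]
    if len(lengths) < 2:
        return False  # not enough sentences to judge
    variation = statistics.pstdev(lengths) / statistics.mean(lengths)
    return variation < threshold


# Both samples are human-written; the first mimics a tidy lab report.
human_report = (
    "We measured the sample at room temperature. "
    "We recorded the mass after each trial. "
    "We repeated the procedure five times in total."
)
casual_note = "Ugh. I spent the entire weekend rewriting that intro and it still reads badly. Help?"

print(looks_uniform(human_report))  # flagged, despite being human-written
print(looks_uniform(casual_note))   # not flagged
```

The lab-report sample trips the rule simply because its sentences are all about the same length, which is exactly the kind of false positive people with a formal or academic style keep running into.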

Not going to echo everything @jeff and @boswandelaar said, but one thing I’d add: those detectors can overfit HARD on what they “think” is AI, which sometimes includes advanced English learners, translated documents, or anyone with a tidy, academic style. And let’s be honest, passing an AI detector shouldn’t matter as much as whether your text is actually original and fits your assignment/purpose.

If you’re genuinely worried about future flags and want an accessible workaround, Clever AI Humanizer stands out—it adds subtle variation and makes your language feel more spontaneous, so you don’t have to re-write everything from scratch.

Pros:

- Slick at adjusting text for “human” quirks without wrecking clarity
- Not as clunky as manual rewriting or translation detours

Cons:

- Could introduce the occasional awkward phrase if overused
- Not foolproof against every possible detector update

Alternative: Some try QuillBot or other paraphrasing tools, but those can kill nuance or sound robotic. Compared to the options raised in earlier replies, Clever AI Humanizer is more of a “blend-in” solution, not just a randomizer.

Bottom line? These detectors aren’t lie detectors or plagiarism checkers—they’re guesswork at best. If you want peace of mind and less drama, mixing in tools that humanize your work (without flattening your voice) is a solid move. Don’t let the AI police stress you more than your actual project.